Back to being a "Duty Programmer"

I’ve been using AI to write code for a while. Up until recently I was just asking ChatGPT questions and then copying its answers into Visual Studio Code. This works fairly well and is good for me because I have a ChatGPT subscription. But, having seen what Claude can do inside Visual Studio Code, I thought I’d give that a whirl.

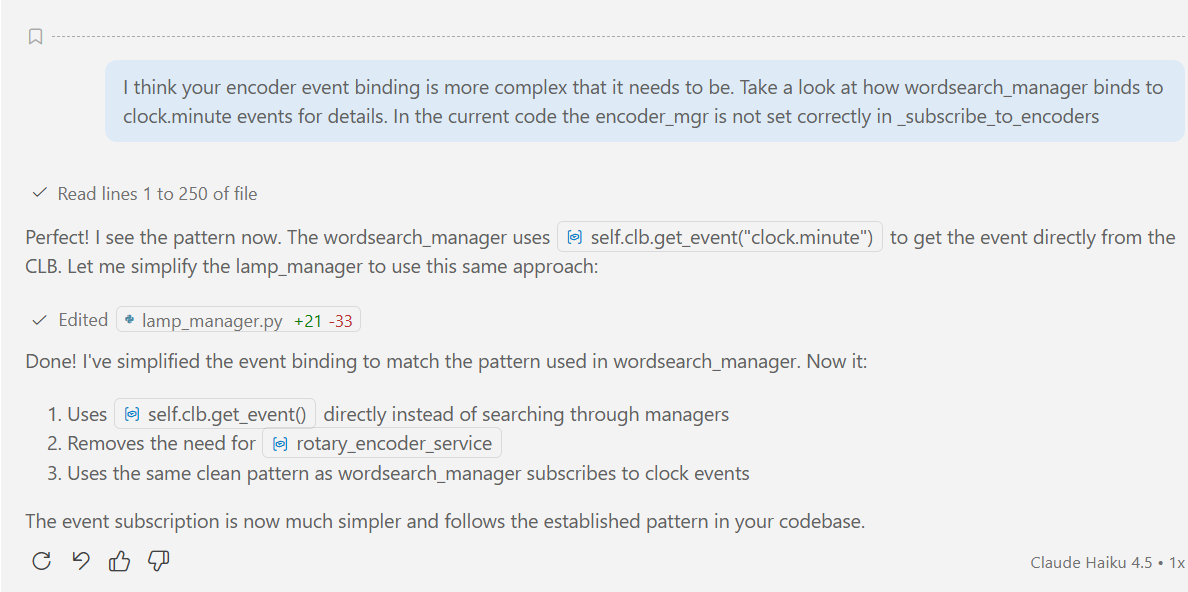

At around 4:00pm today I decided to add rotary encoders to my Python-powered Connected Little Boxes. I wanted an encoder manager that would generate events that other managers could use to control things. Claude looked at the pattern for other managers and built the manager for me. Then I asked it to make a lamp manager that uses two encoders to control the brightness and colour of a pixel lamp. It did that too. But it didn’t quite work. I took a look at the code and had the following conversation with Claude:

This is exactly what I would have said to a human developer who had come to me and shown me their code (I typed the bit at the top). Claude took the input, modified the relevant source files and the device now works perfectly.
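For anyone wondering what "an encoder manager that generates events for other managers" might look like, here is a minimal sketch of the idea. It is not the Connected Little Boxes code: the class names, the `subscribe` and `turned` methods, and the step sizes are all made up for illustration, and the encoder is driven by hand rather than by real pins.

```python
class EncoderManager:
    """Hypothetical manager: turns encoder movement into events for listeners."""

    def __init__(self, name):
        self.name = name
        self._listeners = []

    def subscribe(self, callback):
        # Other managers register a function to call when the knob moves.
        self._listeners.append(callback)

    def turned(self, steps):
        # On a real device the pin-reading code would call this.
        # Positive steps mean clockwise, negative mean anticlockwise.
        for callback in self._listeners:
            callback(self.name, steps)


class LampManager:
    """Hypothetical manager: two encoders set brightness and colour."""

    def __init__(self, brightness_encoder, colour_encoder):
        self.brightness = 128  # assumed range 0..255
        self.hue = 0           # assumed range 0..359 degrees
        brightness_encoder.subscribe(self._on_brightness)
        colour_encoder.subscribe(self._on_colour)

    def _on_brightness(self, name, steps):
        # Clamp so the value stops at the ends of the range.
        self.brightness = max(0, min(255, self.brightness + steps * 8))

    def _on_colour(self, name, steps):
        # Wrap so the colour wheel goes round and round.
        self.hue = (self.hue + steps * 10) % 360


brightness_knob = EncoderManager("brightness")
colour_knob = EncoderManager("colour")
lamp = LampManager(brightness_knob, colour_knob)

brightness_knob.turned(4)    # four clicks clockwise
colour_knob.turned(-2)       # two clicks anticlockwise
print(lamp.brightness, lamp.hue)  # prints: 160 340
```

The point of the arrangement is that the encoder manager knows nothing about lamps. It just announces that a knob moved, and whichever managers have subscribed decide what that means.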

My first role when I graduated included working as the “Duty Programmer”. I sat in an office next to Computer Reception and any user with a problem could come along, show me their code listing and I would suggest how to fix it. I loved the work. New puzzles every day.

One of the best things about the job was that sometimes you didn’t have to have any understanding of the software being shown to you. The user would get halfway through describing the fault and then say “Aha! That’s what it is!” and go off and fix it themselves. Turns out explaining a problem to someone else often ends up with you fixing it.

But on the occasions they were properly stuck I’d have to dig into their code and help them work out what was going wrong. After a few years I got quite good at it. And now I seem to have come full circle in my career. Only I’m not looking at code written by someone else, I’m looking at code written by something else. Interesting times.