There’s always a moment in a spy film when someone says “I’ll just hack into their network and reconfigure it…”. Today I did this for real. I suppose I was hacking my own network, but it still felt a bit like James Bond.

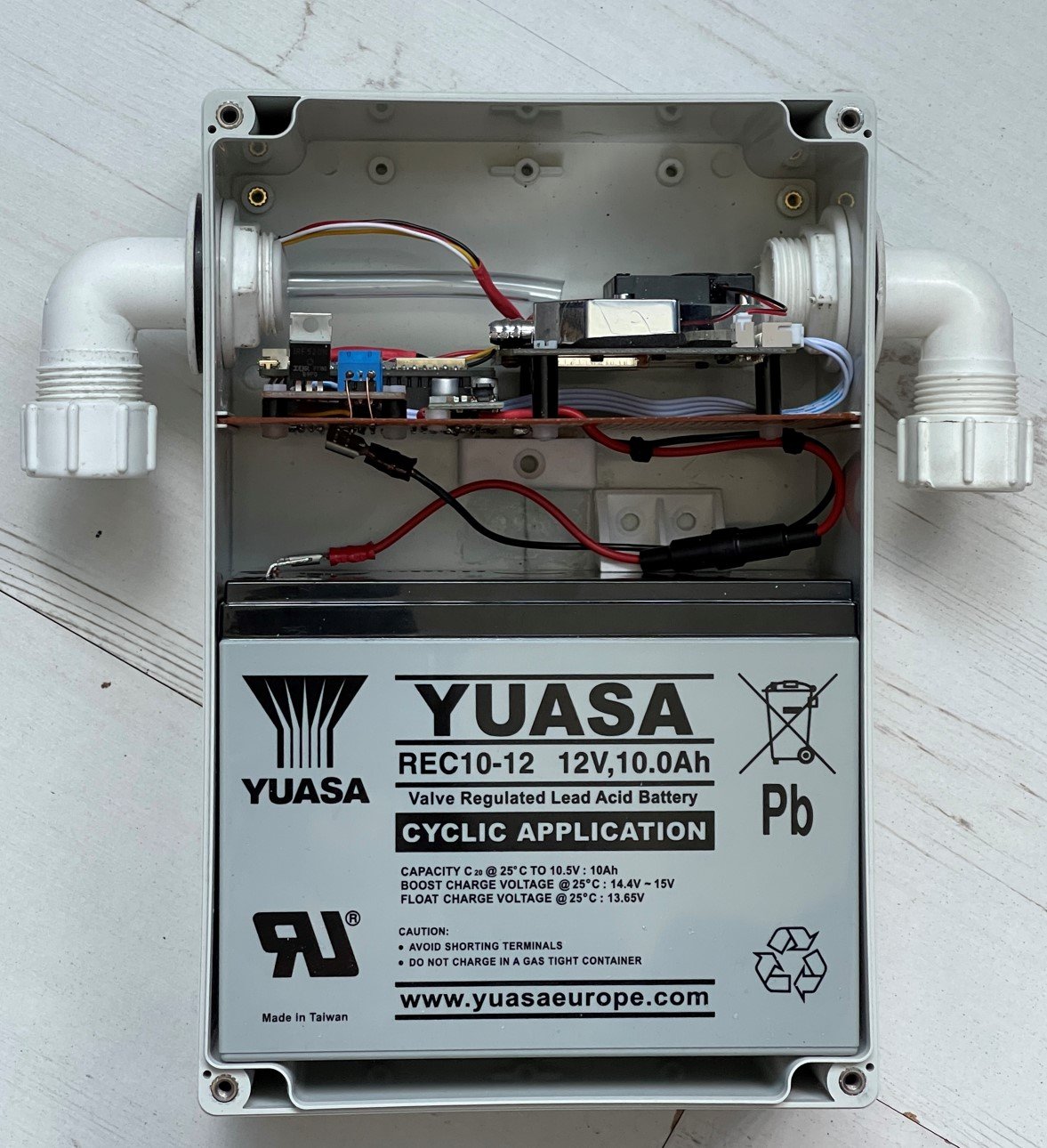

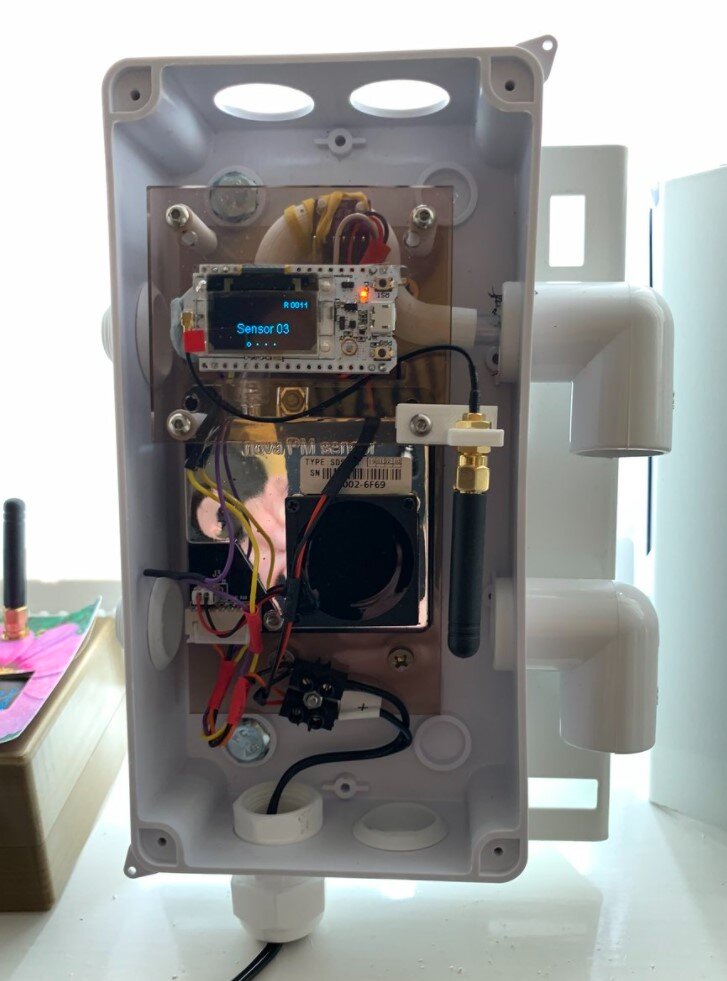

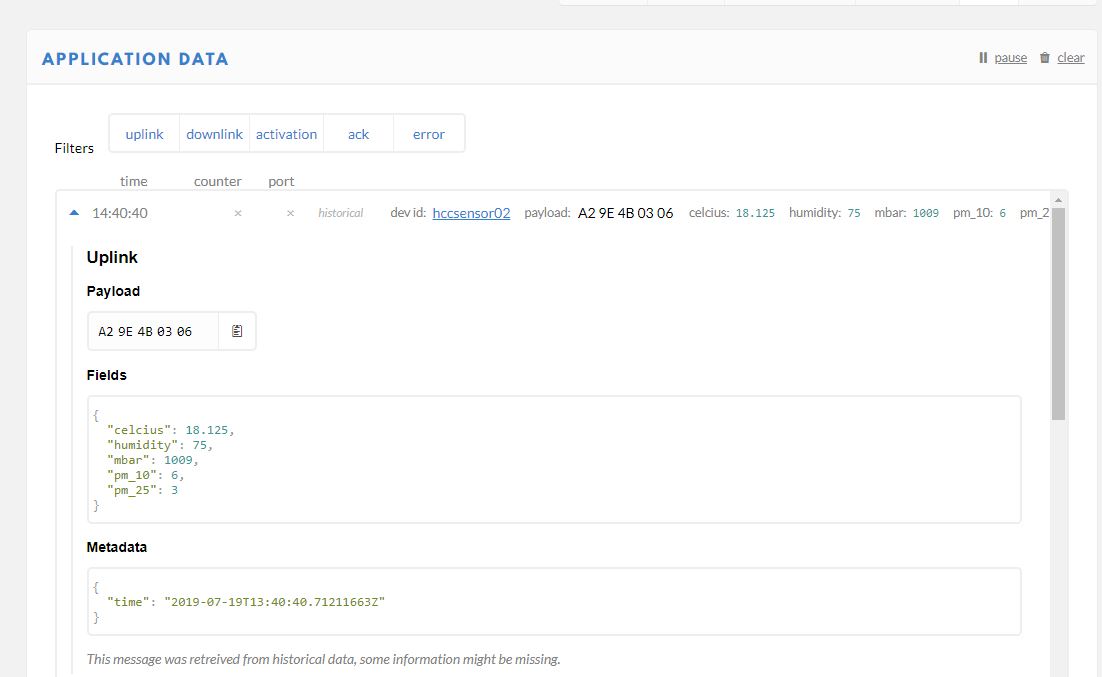

I was fixing one of the sensors on our Connected Humber network. These all use MQTT to send readings back to the server, which then displays them on a map. The problem was that when I set up the sensor I’d set the publish topic incorrectly, so the readings were being sent to the wrong place. I needed to change that topic remotely.

Fortunately my sensor code can accept configuration commands, so this should have been an easy fix: just send the appropriate message. The snag was that once the sensor has sent a reading, it goes into deep sleep to save power, so it is hardly ever awake to hear any messages. However, I’d built a delay into the software so that it stays running for a couple of seconds after it has sent each reading.
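To make the timing problem concrete, here is a minimal Python model of a single wake-up. It is an illustration, not the actual sensor firmware: `wake_cycle`, `send_reading`, `receive`, the `sleep_after_send` setting and the two-second window are all hypothetical stand-ins. The device sends a reading, listens until the window closes, applies any settings it hears, and then reports whether it should go back into deep sleep.

```python
import time


def wake_cycle(send_reading, receive, settings, listen_seconds=2.0,
               clock=time.monotonic):
    """Model one wake-up of a hypothetical battery-powered sensor.

    send_reading: callable that publishes the latest reading.
    receive: callable(timeout) returning a command dict, or None if nothing
             arrives within `timeout` seconds.
    settings: dict of device settings, updated in place by any commands.
    Returns True if the device should go back into deep sleep.
    """
    send_reading()
    # The listening window only opens after a reading has gone out.
    deadline = clock() + listen_seconds
    while (remaining := deadline - clock()) > 0:
        command = receive(remaining)  # waits at most until the window closes
        if command is None:
            break  # window closed without hearing anything
        settings.update(command)  # e.g. {"sleep_after_send": False}
    return settings.get("sleep_after_send", True)
```

In this model, a command that turns up after the window has closed is simply never heard, which is why the reply has to go out the instant a reading appears.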

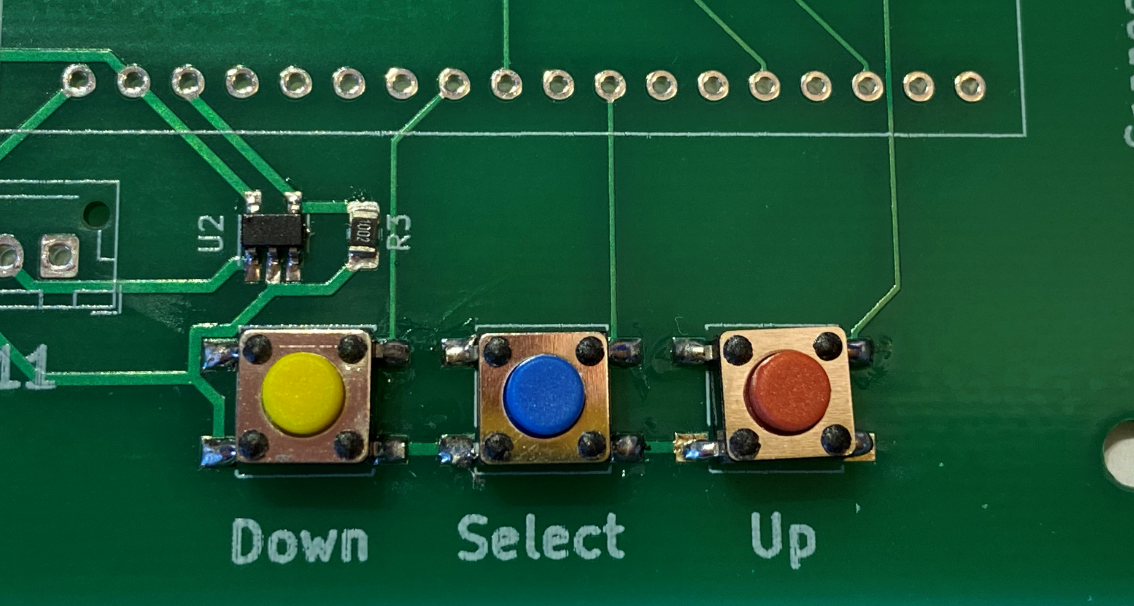

All I had to do was wait until I saw a reading arrive and then quickly send the configuration command. This seemed like a bit of a waste of time, and at my age there was no guarantee that I’d have the ninja reactions needed to get the message out in time. So I wrote a bit of Python instead.

The program turned out to be simple enough. It just waits for an incoming reading from the sensor and sends a command straight back as soon as it sees one. The command I sent was “Don’t go to sleep after each transmission”. With the sensor staying awake, I was able to set the MQTT publish topic correctly, check a few other settings and finally put the device back to sleep when I’d finished. And I really did feel a bit like a hacker in a spy movie while I was doing this.
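A minimal sketch of this kind of responder follows; it is an illustration rather than the original script. It assumes the paho-mqtt 2.x client library and a broker on `localhost:1883`, and the topic names and the command payload are hypothetical placeholders. The decision logic is kept separate from the MQTT connection so it can be checked without a broker.

```python
"""Reply to a sleepy MQTT sensor the moment it publishes a reading.

Topics and payload are hypothetical; the MQTT part assumes paho-mqtt 2.x.
"""

READINGS_TOPIC = "sensors/example/readings"   # hypothetical
COMMAND_TOPIC = "sensors/example/commands"    # hypothetical
STAY_AWAKE = '{"sleep_after_send": false}'    # hypothetical command format


class OneShotResponder:
    """Publishes one command the first time a reading arrives."""

    def __init__(self, readings_topic, command_topic, command, publish):
        self.readings_topic = readings_topic
        self.command_topic = command_topic
        self.command = command
        self.publish = publish  # callable(topic, payload)
        self.sent = False

    def on_reading(self, topic, payload):
        """Return True if this message triggered the command."""
        if self.sent or topic != self.readings_topic:
            return False
        # A reading means the sensor is in its short listening window right now.
        self.publish(self.command_topic, self.command)
        self.sent = True
        return True


def main(host="localhost", port=1883):
    # Imported here so the responder logic above can run without paho installed.
    import paho.mqtt.client as mqtt

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    responder = OneShotResponder(READINGS_TOPIC, COMMAND_TOPIC, STAY_AWAKE,
                                 publish=client.publish)

    def on_message(client, userdata, message):
        if responder.on_reading(message.topic, message.payload):
            print("Command sent")
            client.disconnect()  # makes loop_forever() return

    client.on_message = on_message
    client.connect(host, port)
    client.subscribe(READINGS_TOPIC)
    client.loop_forever()
```

With a broker running, calling `main()` waits for the next reading, fires the command back and exits. Reacting in the `on_message` callback means the reply goes out within milliseconds, well inside a window of a couple of seconds.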

I think I’ll build this out into a proper command transfer program. LoRa-powered sensors work in exactly the same way: a LoRa device only listens for incoming messages for a short time after it has sent something.
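At its core, a general command transfer program needs to hold commands until each device next transmits. Here is a minimal sketch of that queue, independent of MQTT or LoRa; `CommandQueue` and its method names are hypothetical. The default of one command per transmission is an assumption that mirrors LoRaWAN Class A devices, which receive at most one downlink after each uplink.

```python
from collections import defaultdict, deque


class CommandQueue:
    """Holds commands for sleepy devices until each one next transmits."""

    def __init__(self):
        self._pending = defaultdict(deque)  # device id -> commands, oldest first

    def enqueue(self, device_id, command):
        self._pending[device_id].append(command)

    def on_uplink(self, device_id, max_commands=1):
        """Call when a device transmits; returns the commands to send back now."""
        queue = self._pending.get(device_id)  # .get() avoids creating empty entries
        ready = []
        while queue and len(ready) < max_commands:
            ready.append(queue.popleft())
        return ready

    def pending(self, device_id):
        """Number of commands still waiting for this device."""
        return len(self._pending.get(device_id, ()))
```

Anything left over waits for the next transmission, so a sequence such as “stay awake”, “set topic”, “go back to sleep” drains one step per wake-up.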